Clustering in Unsupervised Learning

In unsupervised learning, clustering is the process of dividing a set of data points into groups (called clusters) based on their similarity. The goal of clustering is to discover patterns or relationships within a dataset that might not be immediately apparent.

There are many different clustering algorithms available, each with its own set of advantages and disadvantages.

Some popular clustering algorithms include:

- k-means clustering,

- hierarchical clustering

- density-based clustering

- Model-based clustering

K-means clustering

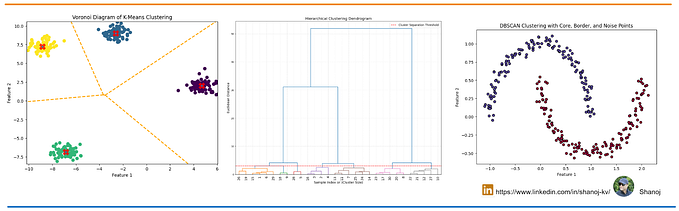

K-means clustering is a popular algorithm for dividing a set of data points into k clusters based on their similarity. The goal of k-means clustering is to minimize the sum of the squared distances between each data point and the centroid of its cluster.

Here is a more detailed explanation of how k-means clustering works:

- Initialization: The first step in k-means clustering is to initialize the centroids of the k clusters. This is typically done by selecting k data points at random from the dataset and using them as the initial centroids.

2) Assignment step: In the assignment step, each data point is assigned to the cluster with the nearest centroid. This is done using the Euclidean distance between the data point and the centroids of the different clusters.

3) Update step: In the update step, the centroid of each cluster is updated to the mean of all the data points belonging to that cluster.

4) Convergence: The algorithm continues to alternate between the assignment and update steps until the clusters stabilize, at which point the algorithm terminates. The clusters are considered to have stabilized when the assignments of the data points do not change from one iteration to the next.

K-means clustering is an iterative algorithm, meaning that it repeats the assignment and update steps until the clusters stabilize. The algorithm is guaranteed to converge, but it may not necessarily find the global minimum of the objective function. This means that the final clusters may not be the optimal solution, but they should be a good approximation.

K-means clustering is a fast and efficient algorithm that is easy to implement and can be used for a wide range of applications, such as customer segmentation, image compression, and anomaly detection. However, it is sensitive to the initial centroid assignments and may not perform well on datasets with non-globular clusters or outliers.

Hierarchical Clustering

Hierarchical clustering is a type of clustering algorithm that creates a hierarchy of clusters by either merging or dividing smaller clusters. There are two main types of hierarchical clustering: agglomerative and divisive.

- Agglomerative hierarchical clustering: Agglomerative hierarchical clustering starts with each data point in its own cluster and progressively merges the closest pairs of clusters until all the data points are contained within a single cluster. This process is guided by a similarity measure, such as the Euclidean distance or the Manhattan distance, which is used to determine the distance between clusters. The resulting hierarchy of clusters can be represented using a dendrogram, which shows the order in which the clusters were merged.

- Divisive hierarchical clustering: Divisive hierarchical clustering starts with all the data points in a single cluster and progressively divides the cluster into smaller clusters until each data point is in its own cluster. This process is also guided by a similarity measure, which is used to determine the distance between data points within a cluster.

Hierarchical clustering is a flexible algorithm that can handle non-linearly distributed data and does not require the user to specify the number of clusters in advance. However, it can be computationally expensive for large datasets and is sensitive to the choice of similarity measure.

Hierarchical clustering is often used in applications such as gene expression analysis, document clustering, and image segmentation.

Density-Based Clustering

Density-based clustering is a type of clustering algorithm that works by identifying clusters of data points that are tightly packed together and separating them from other clusters that are more dispersed. The goal of density-based clustering is to find clusters of arbitrary shape that are not well-separated from one another, and it is particularly useful for identifying clusters in datasets with noise or outliers.

One popular density-based clustering algorithm is DBSCAN (Density-Based Spatial Clustering of Applications with Noise), which works by identifying core points (data points that have a certain number of nearby points within a specified distance, called the Eps) and expanding clusters from these points. Data points that are not reachable from any core points are considered noise points and are excluded from the clusters.

DBSCAN has two main parameters: Eps, which determines the distance between points, and MinPts, which determines the minimum number of points required to form a cluster. These parameters need to be chosen carefully, as they can significantly affect the results of the algorithm.

Density-based clustering is good at identifying clusters of arbitrary shape and can handle datasets with noise and outliers, but it is sensitive to the choice of parameters and may not perform well on datasets with varying densities.

Density-based clustering is often used in applications such as anomaly detection, network intrusion detection, and image segmentation.

Model-Based Clustering

Model-based clustering is a type of clustering algorithm that assumes that the data is generated from a mixture of underlying probability distributions, and the goal is to estimate the parameters of these distributions and assign each data point to the distribution it is most likely to have come from.

One popular model-based clustering algorithm is the Gaussian mixture model (GMM), which assumes that the data is generated from a mixture of several multivariate Gaussian distributions. The GMM algorithm estimates the parameters of the Gaussian distributions (such as the mean and covariance) and assigns each data point to the distribution it is most likely to have come from based on the maximum likelihood estimate.

Model-based clustering algorithms have several advantages, including the ability to handle continuous and mixed data types, the ability to incorporate prior knowledge about the data, and the ability to estimate the uncertainty of the cluster assignments. However, they can be sensitive to the initial parameter estimates and may not perform well on datasets with non-Gaussian distributions or large amounts of noise.

Model-based clustering is often used in applications such as image segmentation, gene expression analysis, and speech recognition.

Conclusion

In conclusion, clustering is an important and commonly used technique in unsupervised learning. It allows data to be grouped into similar clusters, without the need for pre-labeled examples. Clustering algorithms can be applied to a wide range of fields and have numerous applications, including customer segmentation, image compression, and anomaly detection. However, it is important to carefully evaluate the results of a clustering algorithm and choose an appropriate method for the specific task at hand. With the increasing amount of data being generated, the use of clustering will likely continue to be a valuable tool for discovering hidden patterns and relationships in data.

Follow Me

If you find my research interesting, please don’t hesitate to connect with me My Social Profile and also check my other Articles.